CUDA C/C++ on Google Colaboratory

What is CUDA?

CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model developed by NVIDIA for general-purpose computing on its own GPUs. CUDA lets developers speed up compute-intensive applications by running the parallelizable part of the computation on the GPU.

CUDA code doesn't run on an AMD CPU or on Intel HD Graphics; it needs NVIDIA hardware installed in your machine.
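To make "the parallelizable part of the computation" concrete, here is a minimal sketch of a CUDA program that adds two arrays with one GPU thread per element. The names `vector_add` and `blocks_for`, the array size and the block size of 256 are illustrative assumptions, not something taken from this walkthrough. It can be run from a notebook cell once the setup in the procedure below is done.

```cuda
#include <stdio.h>
#include <stdlib.h>

// Each thread adds one pair of elements; threads past the end do nothing.
__global__ void vector_add(const float *a, const float *b, float *c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index
    if (i < n) {
        c[i] = a[i] + b[i];
    }
}

// Number of blocks needed to cover n elements (ceiling division).
// Hypothetical helper, not part of the CUDA API.
int blocks_for(int n, int threads_per_block) {
    return (n + threads_per_block - 1) / threads_per_block;
}

int main() {
    const int n = 1 << 20;     // illustrative array length
    const int threads = 256;   // illustrative block size
    size_t bytes = n * sizeof(float);

    // Host arrays
    float *a = (float *)malloc(bytes);
    float *b = (float *)malloc(bytes);
    float *c = (float *)malloc(bytes);
    for (int i = 0; i < n; ++i) {
        a[i] = 1.0f;
        b[i] = 2.0f;
    }

    // Device arrays
    float *d_a, *d_b, *d_c;
    cudaMalloc((void **)&d_a, bytes);
    cudaMalloc((void **)&d_b, bytes);
    cudaMalloc((void **)&d_c, bytes);
    cudaMemcpy(d_a, a, bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_b, b, bytes, cudaMemcpyHostToDevice);

    // Launch enough blocks of 256 threads to cover all n elements
    vector_add<<<blocks_for(n, threads), threads>>>(d_a, d_b, d_c, n);

    // Copying the result back also waits for the kernel to finish
    cudaMemcpy(c, d_c, bytes, cudaMemcpyDeviceToHost);

    // Verify on the host: every element should be 1 + 2
    int mismatches = 0;
    for (int i = 0; i < n; ++i) {
        if (c[i] != 3.0f) ++mismatches;
    }
    printf("mismatches: %d\n", mismatches);

    cudaFree(d_a);
    cudaFree(d_b);
    cudaFree(d_c);
    free(a);
    free(b);
    free(c);
    return 0;
}
```

On a working GPU runtime this should report no mismatches. The `if (i < n)` guard matters whenever the array length is not a multiple of the block size, because the last block then has spare threads.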

What is Google Colab?

Google Colab is a free cloud service for running notebooks. The most important feature that distinguishes Colab from other free cloud services is that it offers a GPU at no cost, so you can work with CUDA C/C++ on a GPU for free!

Does the NVIDIA requirement mean that not everyone can use CUDA? Not really: we can use other resources for that, like Google Colaboratory. So, let's start with that!

Procedure:

- Go to https://colab.research.google.com in your browser and click New Notebook.

- Switch runtime from CPU to GPU:

Click on Runtime > Change runtime type > Hardware Accelerator > GPU > Save

- Completely uninstall any previous CUDA versions. We need to refresh the cloud instance of CUDA:

!apt-get --purge remove cuda nvidia* libnvidia-*

!dpkg -l | grep cuda- | awk '{print $2}' | xargs -n1 dpkg --purge

!apt-get remove cuda-*

!apt autoremove

!apt-get update

Write the commands in a separate code block and run it. Every line that starts with ‘!’ is executed as a command-line command.

- Install CUDA version 9.2:

!wget https://developer.nvidia.com/compute/cuda/9.2/Prod/local_installers/cuda-repo-ubuntu1604-9-2-local_9.2.88-1_amd64 -O cuda-repo-ubuntu1604-9-2-local_9.2.88-1_amd64.deb

!dpkg -i cuda-repo-ubuntu1604-9-2-local_9.2.88-1_amd64.deb

!apt-key add /var/cuda-repo-9-2-local/7fa2af80.pub

!apt-get update

!apt-get install cuda-9.2

- Check your CUDA installation by running the command given below:

!nvcc --version

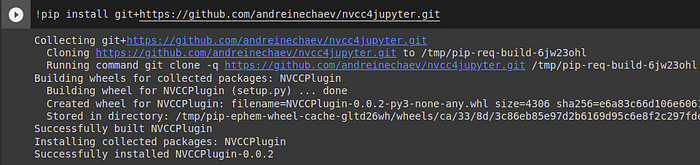

- Install a small extension to run nvcc from the Notebook cells:

!pip install git+https://github.com/andreinechaev/nvcc4jupyter.git

- Load the extension using the code given below:

%load_ext nvcc_plugin

Now we are ready to run CUDA C/C++ code right in the notebook.

- Checking if CUDA is working or not:

To run the code in your notebook, add the %%cu cell magic as the first line of the cell, before your code.

%%cu
#include <stdio.h>
#include <stdlib.h>

__global__ void add(int *a, int *b, int *c) {
    *c = *a + *b;
}

int main() {
    int a, b, c;            // host copies of variables a, b & c
    int *d_a, *d_b, *d_c;   // device copies of variables a, b & c
    int size = sizeof(int);

    // Allocate space for device copies of a, b, c
    cudaMalloc((void **)&d_a, size);
    cudaMalloc((void **)&d_b, size);
    cudaMalloc((void **)&d_c, size);

    // Setup input values
    c = 0;
    a = 3;
    b = 5;

    // Copy inputs to device
    cudaMemcpy(d_a, &a, size, cudaMemcpyHostToDevice);
    cudaMemcpy(d_b, &b, size, cudaMemcpyHostToDevice);

    // Launch add() kernel on GPU
    add<<<1,1>>>(d_a, d_b, d_c);

    // Copy result back to host
    cudaError_t err = cudaMemcpy(&c, d_c, size, cudaMemcpyDeviceToHost);
    if (err != cudaSuccess) {
        printf("CUDA error copying to Host: %s\n", cudaGetErrorString(err));
    }

    printf("result is %d\n", c);

    // Cleanup
    cudaFree(d_a);
    cudaFree(d_b);
    cudaFree(d_c);

    return 0;
}

If the cell prints "result is 8", YOU DID IT!
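The check above only tests the final cudaMemcpy for errors. As a slightly sturdier variant, the sketch below wraps every runtime call in an error-checking macro and also prints the name of the GPU that the runtime sees. `CUDA_CHECK` and `run_checked_add` are hypothetical helper names, not part of CUDA; the runtime calls themselves (cudaGetDeviceCount, cudaGetDeviceProperties, cudaGetLastError, cudaDeviceSynchronize) are standard. Run it in a cell that starts with %%cu, as before.

```cuda
#include <stdio.h>
#include <stdlib.h>

// Hypothetical helper macro (not part of CUDA): print the failing
// location and the CUDA error string, then exit.
#define CUDA_CHECK(call)                                              \
    do {                                                              \
        cudaError_t err_ = (call);                                    \
        if (err_ != cudaSuccess) {                                    \
            fprintf(stderr, "CUDA error at %s:%d: %s\n", __FILE__,    \
                    __LINE__, cudaGetErrorString(err_));              \
            exit(EXIT_FAILURE);                                       \
        }                                                             \
    } while (0)

__global__ void add(const int *a, const int *b, int *c) {
    *c = *a + *b;
}

// Runs the same 3 + 5 addition as above, checking every call,
// and returns the value copied back from the GPU.
int run_checked_add() {
    int a = 3, b = 5, c = 0;
    int *d_a, *d_b, *d_c;

    CUDA_CHECK(cudaMalloc((void **)&d_a, sizeof(int)));
    CUDA_CHECK(cudaMalloc((void **)&d_b, sizeof(int)));
    CUDA_CHECK(cudaMalloc((void **)&d_c, sizeof(int)));

    CUDA_CHECK(cudaMemcpy(d_a, &a, sizeof(int), cudaMemcpyHostToDevice));
    CUDA_CHECK(cudaMemcpy(d_b, &b, sizeof(int), cudaMemcpyHostToDevice));

    add<<<1, 1>>>(d_a, d_b, d_c);
    CUDA_CHECK(cudaGetLastError());        // errors from the launch itself
    CUDA_CHECK(cudaDeviceSynchronize());   // errors raised while the kernel ran

    CUDA_CHECK(cudaMemcpy(&c, d_c, sizeof(int), cudaMemcpyDeviceToHost));

    CUDA_CHECK(cudaFree(d_a));
    CUDA_CHECK(cudaFree(d_b));
    CUDA_CHECK(cudaFree(d_c));
    return c;
}

int main() {
    // Fails with a readable message if no usable GPU or driver is present.
    int device_count = 0;
    CUDA_CHECK(cudaGetDeviceCount(&device_count));

    cudaDeviceProp prop;
    CUDA_CHECK(cudaGetDeviceProperties(&prop, 0));
    printf("Device 0 of %d: %s (compute capability %d.%d)\n",
           device_count, prop.name, prop.major, prop.minor);

    printf("result is %d\n", run_checked_add());
    return 0;
}
```

Checking cudaGetLastError right after the launch is worth the extra line: a kernel launch returns no status of its own, so a failed launch can otherwise go unnoticed until a later call reports it.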